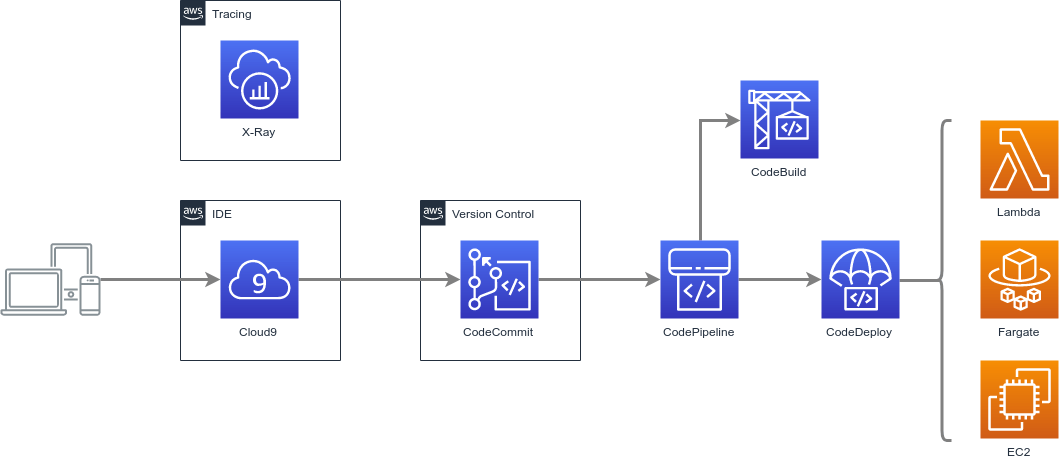

DevOps

Unpacking my ‘go-to’ architecture for high-velocity application delivery.

This architecture reflects my current technical acumen and business philosophies:

- The customer is the most important person in the room

- Do more with less

- Secure by design

- Learn & apply

- Follow the data

- Simplicity accelerates

I use it within my own projects — and as a baseline for related consulting work.

It’s an ideal — secure, performant and modular — platform for delivering:

- CI/CD pipelines,

- Version control, and

- Development environments

Inspired by, and perpetually evolving from, AWS reference architectures and DevOps best practices:

- Microservice patterns

- Infrastructure as Code (IaC)

- Communication & collaboration

And continuously validated against the AWS Well-Architected and NIST Cybersecurity frameworks.

Push-Button Deployment

The architecture is maintained as a library of CloudFormation templates and is deployable on demand, either as individual modules or end-to-end.
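To make the "individual modules or end-to-end" idea concrete, here is a minimal Python sketch of how a deployment plan could be derived from a template library. The module names, template file names, and the assumption that every add-on depends on the core stack are hypothetical, chosen only for illustration; they are not taken from the actual library.

```python
# Hypothetical module catalogue. Names, template files, and dependencies
# are illustrative assumptions, not the real template library.
MODULES = {
    "core": {"template": "core.yaml", "depends_on": []},
    "version-control": {"template": "version-control.yaml", "depends_on": ["core"]},
    "dev-environment": {"template": "dev-environment.yaml", "depends_on": ["core"]},
    "tracing": {"template": "tracing.yaml", "depends_on": ["core"]},
}


def deployment_order(requested=None):
    """Return module names ordered so that dependencies come first.

    With no argument, every module is planned (end-to-end deployment).
    """
    targets = list(MODULES) if requested is None else list(requested)
    ordered = []
    visiting = set()

    def visit(name):
        if name in ordered:
            return
        if name not in MODULES:
            raise KeyError(f"unknown module {name!r}")
        if name in visiting:
            raise ValueError(f"circular dependency at {name!r}")
        visiting.add(name)
        for dependency in MODULES[name]["depends_on"]:
            visit(dependency)
        visiting.discard(name)
        ordered.append(name)

    for target in targets:
        visit(target)
    return ordered


def stack_plan(prefix, requested=None):
    """Pair each planned module with a stack name and its template file."""
    return [
        (f"{prefix}-{name}", MODULES[name]["template"])
        for name in deployment_order(requested)
    ]


if __name__ == "__main__":
    # Single module: the core stack is pulled in automatically.
    print(stack_plan("demo", ["tracing"]))
    # End-to-end: every module, core first.
    print(stack_plan("demo"))
```

Each pair in the plan could then be handed to whatever tool creates the CloudFormation stacks; that step is deliberately left out of the sketch.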

Design Considerations

- Why not Terraform? CloudFormation covers everything required in a universal format (YAML/JSON), so there is no compelling reason to take on the added costs and complexity of a third-party tool.

- Why AWS? In a word…ecosystem. The breadth and quality of services, coupled with their integration, provide the most compelling business case. After working with nearly all of AWS’s services over the past 10+ years, and applying their published best practices and reference architectures, AWS has earned my trust…and my business. I’ve also used GCP and Azure quite a bit, but have always ended up back on AWS for performance or architectural reasons.

The Bigger Picture

This architecture also fills a key role in a more comprehensive cloud strategy:

So let’s dive in…

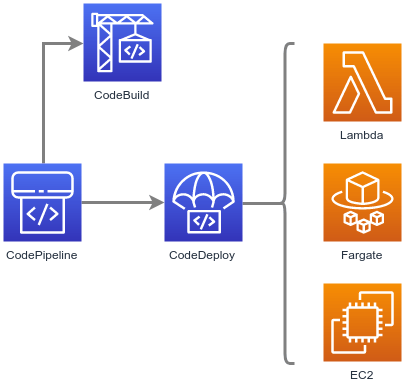

Core Components

Laying the groundwork to rapidly build and deliver software products.

- CodePipeline — Orchestration of software release processes using continuous delivery

- CodeBuild — Build and test code

- CodeDeploy — Automate code deployments (Blue/Green, etc.) to containers, functions, virtual machines, etc.

- Simple Storage Service (S3) — Store build artifacts

- Lambda — Function-based compute workloads

- Elastic Compute Cloud (EC2) — Virtual machine-based compute workloads

- Elastic Container Service (ECS) — Container-based compute workloads

- Elastic Container Registry (ECR) — Container registry

- Key Management Service (KMS) — Key management for encrypting data at-rest on S3

- Virtual Private Cloud (VPC) — Network-level isolation of resources and traffic (including Security Groups, NACLs, NAT, Route Tables, etc.)

- CloudWatch — Collect and store logs from Lambda

Together, these services simplify provisioning and managing infrastructure, deploying application code, automating software release processes, and monitoring application and infrastructure performance.
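As a rough illustration of how these components fit together, the following Python sketch assembles a CloudFormation template (as JSON) for a three-stage pipeline: source, build, deploy, with artifacts stored in a KMS-encrypted S3 bucket. The repository, project, application, and ARN values are placeholders, and using CodeCommit as the source stage is an assumption; treat this as a sketch of the shape of the resource rather than the actual template.

```python
import json


def action(name, category, provider, configuration, inputs=(), outputs=()):
    """Build one CodePipeline action declaration for an AWS-owned provider."""
    return {
        "Name": name,
        "ActionTypeId": {
            "Category": category,
            "Owner": "AWS",
            "Provider": provider,
            "Version": "1",
        },
        "Configuration": configuration,
        "InputArtifacts": [{"Name": artifact} for artifact in inputs],
        "OutputArtifacts": [{"Name": artifact} for artifact in outputs],
    }


def build_template(role_arn, artifact_bucket, kms_key_arn):
    """Return a CloudFormation template dict with a single pipeline resource."""
    stages = [
        {"Name": "Source", "Actions": [action(
            "Checkout", "Source", "CodeCommit",
            # Placeholder repository and branch names.
            {"RepositoryName": "demo-repo", "BranchName": "main"},
            outputs=["SourceOutput"])]},
        {"Name": "Build", "Actions": [action(
            "BuildAndTest", "Build", "CodeBuild",
            {"ProjectName": "demo-build"},  # placeholder project name
            inputs=["SourceOutput"], outputs=["BuildOutput"])]},
        {"Name": "Deploy", "Actions": [action(
            "Release", "Deploy", "CodeDeploy",
            # Placeholder application and deployment group names.
            {"ApplicationName": "demo-app", "DeploymentGroupName": "demo-group"},
            inputs=["BuildOutput"])]},
    ]
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Resources": {
            "Pipeline": {
                "Type": "AWS::CodePipeline::Pipeline",
                "Properties": {
                    "RoleArn": role_arn,
                    # Build artifacts live in S3, encrypted at rest with KMS.
                    "ArtifactStore": {
                        "Type": "S3",
                        "Location": artifact_bucket,
                        "EncryptionKey": {"Id": kms_key_arn, "Type": "KMS"},
                    },
                    "Stages": stages,
                },
            }
        },
    }


if __name__ == "__main__":
    template = build_template(
        role_arn="arn:aws:iam::111111111111:role/demo-pipeline-role",
        artifact_bucket="demo-artifact-bucket",
        kms_key_arn="arn:aws:kms:us-east-1:111111111111:key/example-key-id",
    )
    print(json.dumps(template, indent=2))
```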

Design Considerations

- Why not Jenkins, Ansible or Travis? Thanks to its deep integration with other AWS tools, CodePipeline provides a more compelling business case.

- Why not Chef, Puppet or Salt? If a formal configuration management tool is required to support a specific environment, my go-to is OpsWorks (Chef). I really like the other tools, but OpsWorks simply provides the most compelling business case.

Expansion Modules

With this solid foundation in place, we can easily enhance functionality using a variety of add-on modules:

- Version Control

- Development Environment

- Debug/Tracing

Let’s dive a little deeper into those modules…

Version Control Service

This module provides a source code control service.

- CodeCommit — Store Code in Private Git Repositories, encrypted git-based versioning

This increases the speed and frequency of development lifecycles.

Design Considerations

- Why not GitHub? CodeCommit offers deeper integration into the pipeline, more granular control over permissions (through IAM), and built-in notifications (through SNS).
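One example of the granular permission control that IAM integration makes possible is branch-level protection. The Python sketch below generates an IAM policy document that denies direct pushes and branch deletion on selected branches of a repository, modeled on the pattern in the CodeCommit documentation. The repository ARN and branch names are placeholders, and the list of denied actions is a deliberately short subset.

```python
import json


def branch_protection_policy(repository_arn, branches):
    """Return an IAM policy denying direct changes to the given branches."""
    refs = [f"refs/heads/{branch}" for branch in branches]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Deny",
                # A short subset of write actions, kept small for readability.
                "Action": [
                    "codecommit:GitPush",
                    "codecommit:DeleteBranch",
                    "codecommit:PutFile",
                ],
                "Resource": repository_arn,
                "Condition": {
                    # Apply the deny only when the request targets these refs.
                    "StringEqualsIfExists": {"codecommit:References": refs},
                    "Null": {"codecommit:References": "false"},
                },
            }
        ],
    }


if __name__ == "__main__":
    policy = branch_protection_policy(
        # Placeholder ARN for illustration only.
        "arn:aws:codecommit:us-east-1:111111111111:demo-repo",
        ["main"],
    )
    print(json.dumps(policy, indent=2))
```

Attached to a developer group, a policy like this would still allow changes to reach the protected branch through whatever path the remaining permissions leave open, such as pull requests.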

Development Environment

This module adds an Integrated Development Environment (IDE) for software development.

- Cloud9 — Cloud-based IDE for writing, running, and debugging code

Each developer gets a dedicated, cloud-based development environment.

Debug/Tracing Service

This module adds an application tracing platform for debugging/troubleshooting.

- X-Ray — Analyze and debug applications

X-Ray provides a detailed, end-to-end view of how the application functions, which supports root cause analysis and similar troubleshooting.
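The end-to-end view relies on a trace ID that travels with each request, typically in the `X-Amzn-Trace-Id` HTTP header. As a small illustration of that mechanism, the Python sketch below generates an ID in the X-Ray format (version, epoch time in hex, 96-bit random identifier) and parses a tracing header into its fields. In practice the X-Ray SDK handles this; the helper names here are hypothetical.

```python
import os
import time


def new_trace_id(now=None):
    """Generate a trace ID in the X-Ray format: 1-<8 hex time>-<24 hex random>."""
    epoch_seconds = int(time.time() if now is None else now)
    return f"1-{epoch_seconds:08x}-{os.urandom(12).hex()}"


def parse_trace_header(value):
    """Split a tracing header such as 'Root=...;Parent=...;Sampled=1' into a dict."""
    fields = {}
    for part in value.split(";"):
        key, separator, field_value = part.strip().partition("=")
        if separator:
            fields[key] = field_value
    return fields


if __name__ == "__main__":
    trace_id = new_trace_id()
    header = f"Root={trace_id};Sampled=1"
    print(header)
    print(parse_trace_header(header))
```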